Last time we dove into the artificial intelligence (AI) pool, we focused on what it is, its emergence and its history. While we touched on everything from its roots in Greek mythology and Jewish folklore to more contemporary examples such as Mary Shelley’s Frankenstein, our main focus was the period from the 1950s to today, which has been marked by alternating golden years and what we now call the AI winters.

We also covered the three eras of AI spanning the 60 or so years from the inception of modern AI to the present: neural networks (1950s – 1970s), machine learning (1980s – 2010s) and deep learning (present day). Finally, we looked at the four types of AI: reactive machines, limited memory, theory of mind (we’re not here yet) and self-awareness (we’re definitely not here yet).

Now we stray from the technical and move toward the theoretical as we talk about the implications of AI. Is it dangerous? Will killer robots rise and enslave their human creators? Is automation going to wipe out our workforce? Only time will tell, but for today, we theorize.

Is AI Dangerous?

“Computers can, in theory, emulate human intelligence, and exceed it… Success in creating effective AI, could be the biggest event in the history of our civilization. Or the worst. We just don’t know. So we cannot know if we will be infinitely helped by AI, or ignored by it and side-lined, or conceivably destroyed by it.” – Stephen Hawking

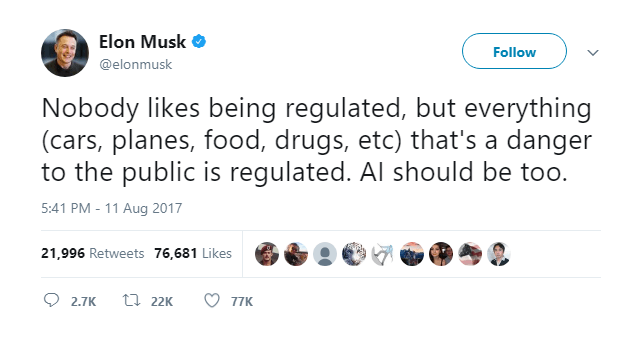

AI has the potential to completely transform society. The problem is uncertainty: will it benefit us or harm us? We just don’t know, which is why many prominent figures besides Stephen Hawking are voicing apprehension as AI research moves forward. For example, Elon Musk, business magnate, inventor, founder of SpaceX and co-founder of Tesla Inc., shares Hawking’s concerns about AI and even considers it a greater threat than North Korea’s nuclear capabilities.

Musk has even pushed for proactive regulation of AI, arguing that by the time we regulate reactively, it will be too late.

Regulation is a funny thing: we usually don’t take action until catastrophe strikes and something that could have been avoided has already happened. Because of AI’s inherent nature and unknown possibilities, that approach may not work here; we may not get a second chance. And considering Elon Musk’s line of work and his relationship with AI and autonomous machines, we’d be smart to take heed.

When we consider the evolution of human intelligence, things get even murkier. Human progress is limited by our biology; the same doesn’t hold for machines. Machines can be programmed with information that would take a human a lifetime to learn, and then some. We also have a general idea of how we got to where we are: we’ve adapted to our environment and to changing circumstances. We have no comparable understanding of how machine intelligence might develop.

We may eventually create an AI program with superintelligence that can not only improve itself but also replicate itself (think of the Replicators in Stargate SG-1) in a recursive self-improvement cycle. The resulting intelligence explosion could, theoretically, displace us as Earth’s top dog and place machines at the top of the metaphorical food chain. Even worse, considering how we tend to treat each other and the environment, a sentient AI program could see us as a threat to the planet and act to eliminate that threat (as countless sci-fi movies have imagined).

No matter how much we learn, we may never fully understand what true AI entails. As with Pandora’s box, we may come to regret our curiosity. Good intentions, and a genuine desire for AI’s impact on society to be positive and beneficial, don’t guarantee that outcome. Think of a genie in a lamp and what happens when you make a wish. You may, for example, wish for peace on Earth. The problem is, who’s to say what ‘peace on Earth’ means? What if it means no humans, or no sentient beings at all? In that case, Earth would be peaceful because there would be no living beings left to cause discord.

Back to AI: unless we explicitly align their goals with ours in every single instance, we have no way of controlling how these systems behave as they go about accomplishing what they’re programmed to do. If we were to, let’s say, task a self-driving car with getting us from point A to point B as fast as possible, it might ignore traffic laws and drive so erratically that we are injured in the process. To prevent this and related problems, we would need to anticipate every possible interpretation of the instructions we give and guard against calamity in advance.

The Danger of Automation

Like Musk, Bill Gates agrees that artificial intelligence carries real dangers, but his more immediate concerns relate to the job market and automation:

“I am in the camp that is concerned about super intelligence,” Gates wrote. “First the machines will do a lot of jobs for us and not be super intelligent. That should be positive if we manage it well. A few decades after that though the intelligence is strong enough to be a concern. I agree with Elon Musk and some others on this and don’t understand why some people are not concerned.”

At its current rate of improvement, automation could take over many jobs that humans currently hold. For example, McKinsey & Company released a report analyzing the automation potential of more than 2,000 work activities across more than 800 occupations in the United States, and found the following:

1. Highly Susceptible to Automation

   - Predictable physical work: 78% of time spent can be automated

     - Welding and soldering on an assembly line, food preparation, packaging goods, etc.

   - Data processing: 69% of time spent can be automated

   - Data collection: 64% of time spent can be automated

2. Less Susceptible to Automation

   - Unpredictable physical work: 25% of time spent can be automated

     - Construction, forestry, raising outdoor animals, etc.

   - Stakeholder interaction: 20% of time spent can be automated

3. Least Susceptible to Automation

   - Applying expertise: 18% of time spent can be automated

     - Coding, copywriting, etc.

   - Managing others: 9% of time spent can be automated

Until Next Time

For now, the most immediate danger AI poses comes in the form of automation, as jobs shift from humans to machines. Yes, we have robots like Sophia making headlines left and right, but as advanced as it (she?) is, it still pales in comparison to what true artificial intelligence could bring. As such, the broader dangers of AI remain theoretical until proven otherwise.

Tell us your thoughts in the comments.